About early computers

The early computers were a far cry from the sleek, sophisticated devices we use today. They were massive machines, often taking up entire rooms, and were incredibly expensive to build and operate. Despite these limitations, they played a crucial role in laying the groundwork for modern computing.

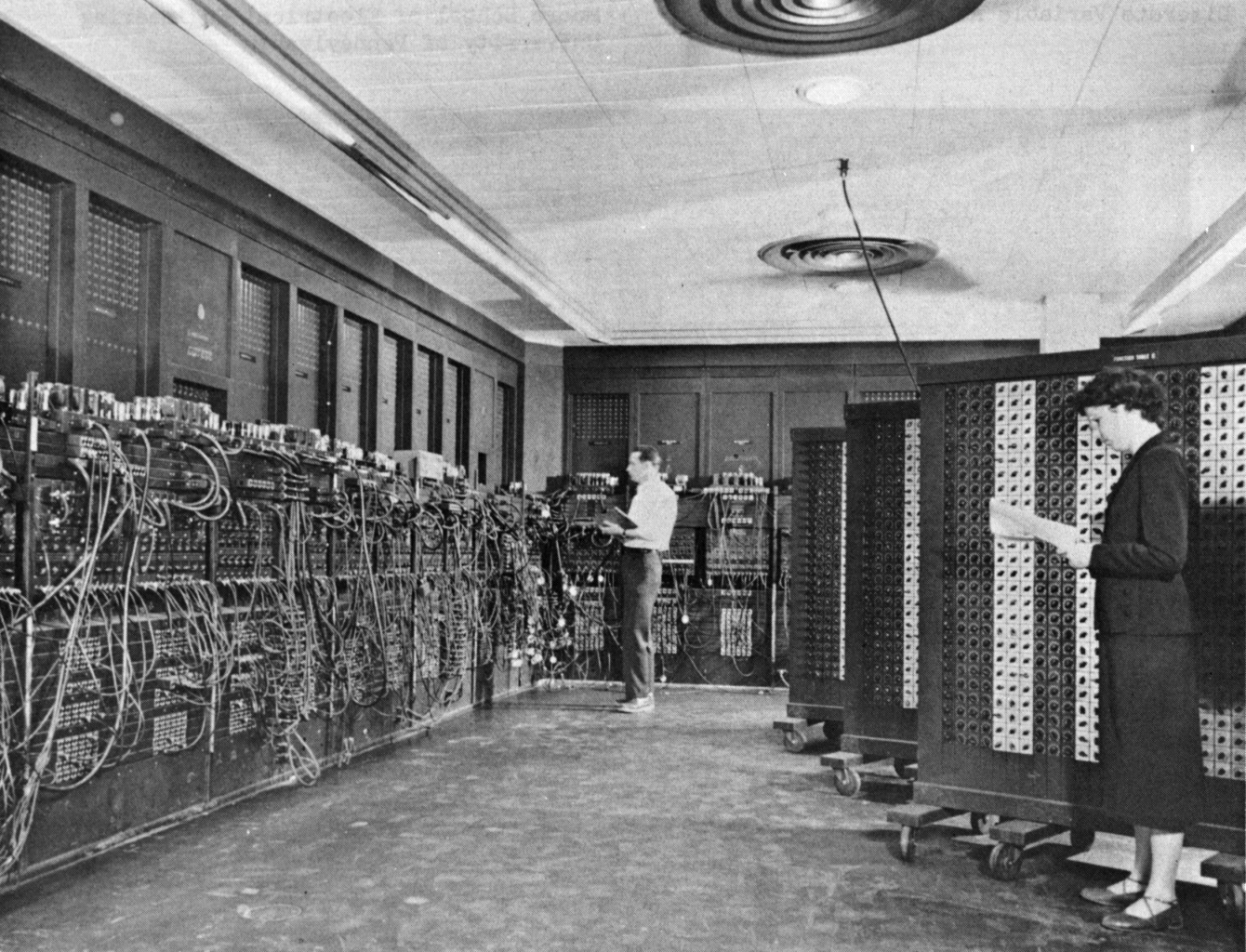

One of the earliest electronic computers was the Electronic Numerical Integrator And Computer (ENIAC), which was built during World War II to help calculate artillery firing tables. The ENIAC was an impressive feat of engineering, with over 17,000 vacuum tubes and a weight of about 30 tons. It could perform about 5,000 additions per second, making it the fastest computer of its time.

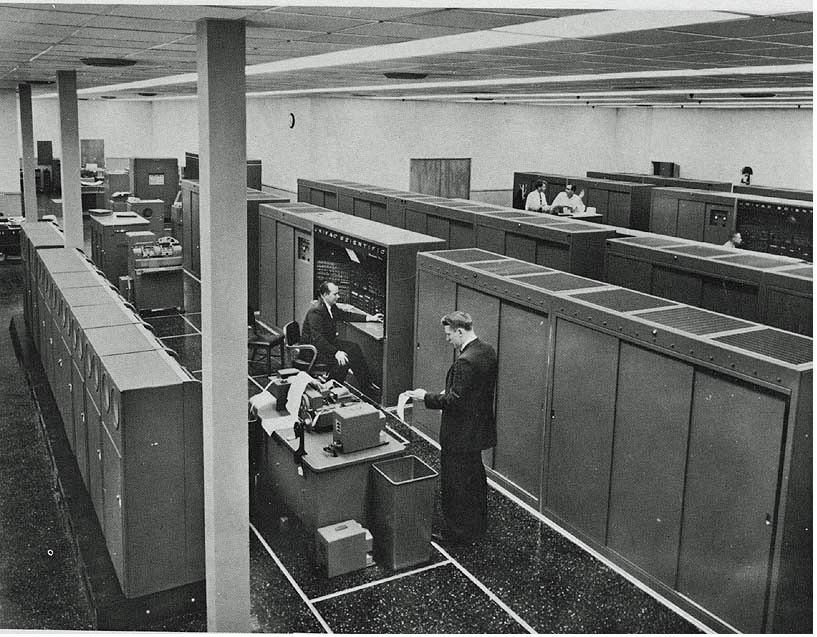

Another early computer was the UNIVAC I, which was delivered in 1951 and was one of the first computers built for commercial use. The U.S. Census Bureau used it to process data from the 1950 Census, and businesses and government agencies later used UNIVAC machines for a variety of applications.

Despite their impressive capabilities, these early computers were costly to build and run. The ENIAC, for example, cost around $500,000 to build (equivalent to over $7 million today) and required a team of technicians to maintain it. It also drew around 150,000 watts of electricity, roughly the power consumption of 150 modern homes.
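To get a feel for the power comparison, here is a minimal sketch of the arithmetic behind it. The two input figures are the rough estimates quoted in this article, and the function name is just an illustrative choice. It shows the average draw per home that the comparison implies, and how much energy the ENIAC would use if it ran for a full day.

```python
# Rough figures quoted in the article; treat them as estimates, not measurements.
ENIAC_POWER_WATTS = 150_000
EQUIVALENT_HOMES = 150


def implied_watts_per_home(total_watts: float, homes: int) -> float:
    """Average continuous draw per home implied by the comparison."""
    return total_watts / homes


watts_per_home = implied_watts_per_home(ENIAC_POWER_WATTS, EQUIVALENT_HOMES)

# Energy used in one day, assuming (hypothetically) the machine ran for 24 hours.
kwh_per_day = ENIAC_POWER_WATTS / 1000 * 24

print(f"Implied average draw per home: {watts_per_home:.0f} W")
print(f"Energy for 24 hours of operation: {kwh_per_day:.0f} kWh")
```

In other words, the comparison assumes a home that draws about 1 kilowatt on average around the clock. Real household usage varies a lot, so the "150 homes" figure is best read as a ballpark.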

Minicomputers

Minicomputers emerged in the 1960s as a more affordable and accessible alternative to earlier computers, which only large organizations and institutions could afford.

One of the key features of minicomputers was their ability to support multiple users and multiple programs simultaneously. This made them more versatile than the early microcomputers that followed, which could only support one user and one program at a time. Minicomputers also had larger and faster memory systems, as well as more powerful processors, which allowed them to handle more complex and demanding tasks.

Microcomputer revolution

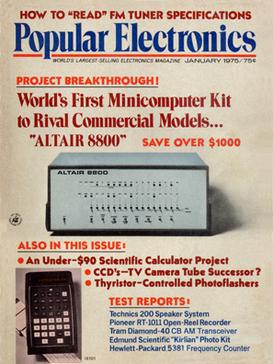

The development of smaller and more affordable microcomputers allowed individuals and households to have access to computing power for the first time.

The first microcomputers were developed in the 1970s and were based on the microprocessor, a small and affordable central processing unit (CPU) that could be mass produced. These early microcomputers, such as the Altair 8800 and the Apple I, were often sold as kits that required users to assemble and program the computer themselves. Despite their limitations, these early microcomputers were extremely popular with hobbyists and tech enthusiasts.

The 1980s saw the rise of more advanced and user-friendly microcomputers, such as the IBM PC and the Macintosh. These computers featured improved hardware and software, and machines like the Macintosh introduced a graphical user interface (GUI) that made computing accessible to a wider audience. The proliferation of these personal computers led to the growth of the home computer market, as well as the development of new industries and applications for computing technology.

Conclusion

Despite their limitations, the early computers were an important step forward in the development of modern computing technology. They paved the way for more advanced and powerful machines and laid the foundation for the technology we use today. They may seem primitive by today's standards, but each generation, from room-sized machines to minicomputers to personal computers, made computing more accessible.
